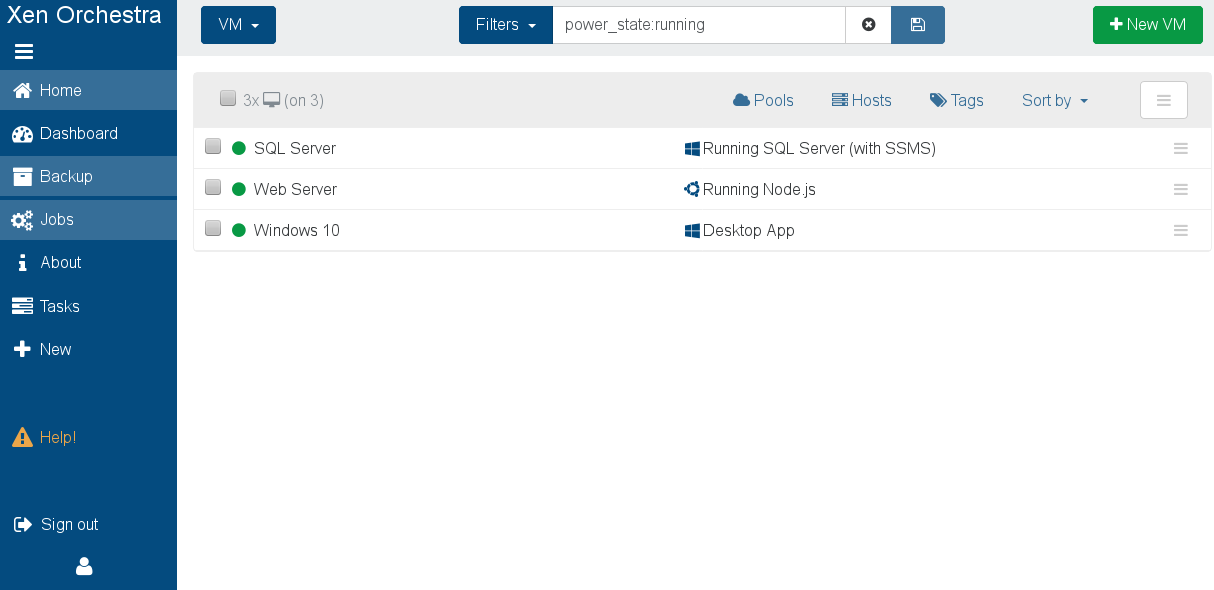

Up until now, students have been able to create Virtual Machines (VMs) on the Dundalk Institute of Technology network via our Xen Orchestra Appliance (XOA) at http://xoa.comp.dkit.ie. But VMs in the real world are rapidly being replaced by “containers”, so it’s time to take things to a whole new level.

“Docker is a top-rated server solution on the market. Businesses are adopting its use at a remarkable rate as the move from virtual machines (VM) to containers becomes preferable; therefore, the skill of managing Docker is becoming increasingly desirable in potential employees.”

Eolas Recruitment, Dublin

What Are Virtual Machines (VMs) again?

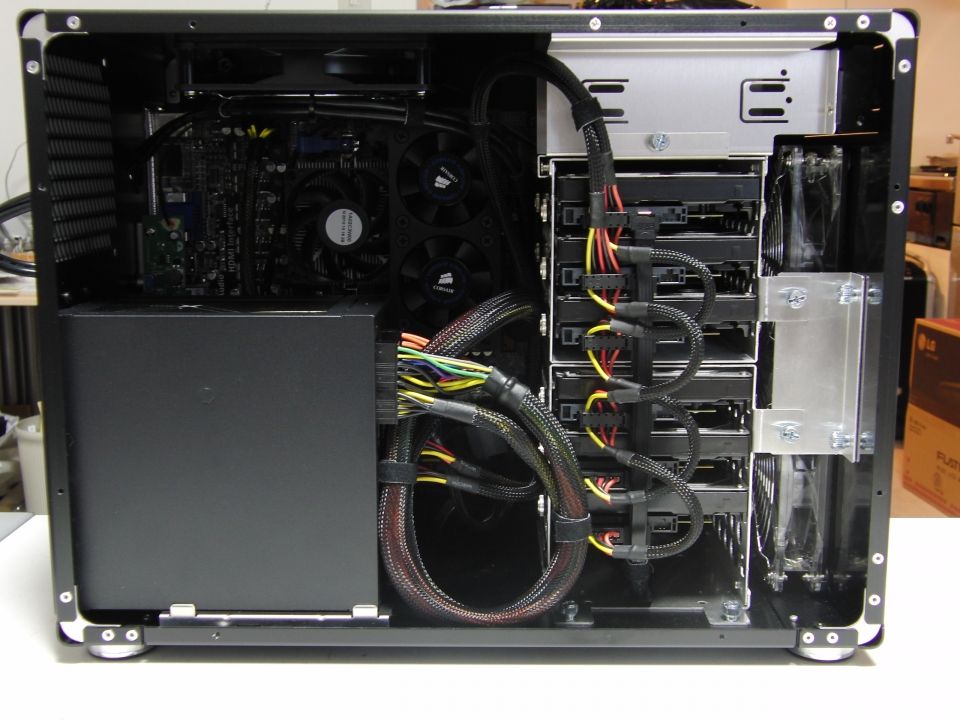

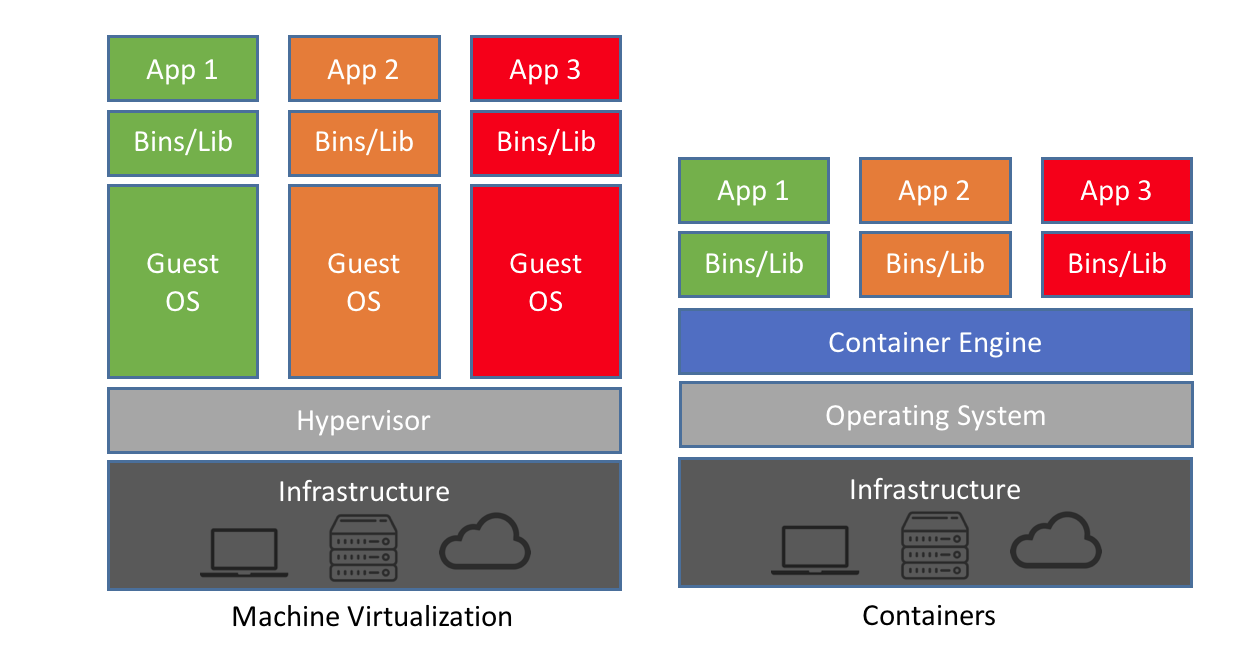

Historically, as server processing power and capacity increased, bare metal applications weren’t able to exploit the new abundance of resources. Thus, VMs were born: software that runs on top of a physical server to emulate a particular hardware system. A hypervisor, or virtual machine monitor, is software, firmware, or hardware that creates and runs VMs. It sits between the hardware and the virtual machine and is necessary to virtualize the server.

Within each virtual machine runs a unique guest operating system. VMs with different operating systems can run on the same physical server – a UNIX VM can sit alongside a Linux VM, and so on. Each VM has its own binaries, libraries, and applications that it services, and the VM may be many gigabytes in size.

Server virtualization provided a variety of benefits, one of the biggest being the ability to consolidate applications onto a single system. Gone were the days of a single application running on a single server. Virtualization ushered in cost savings through reduced footprint, faster server provisioning, and improved disaster recovery (DR), because the DR site hardware no longer had to mirror the primary data center.

Development also benefited from this physical consolidation because greater utilization on larger, faster servers freed up subsequently unused servers to be repurposed for QA, development, or lab gear.

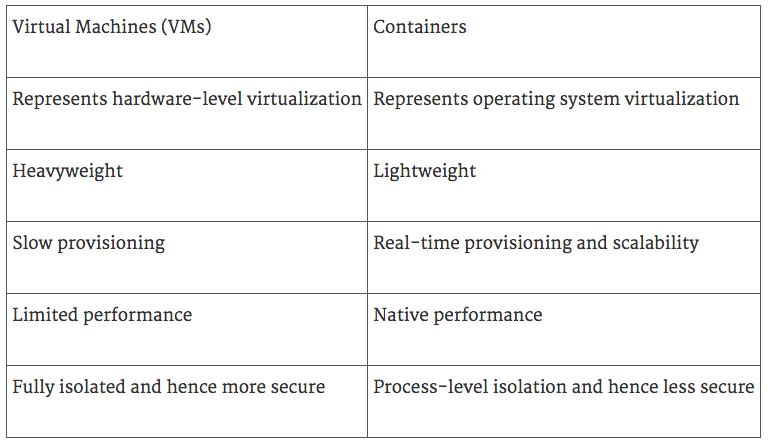

But this approach has had its drawbacks. Each VM includes a separate operating system image, which adds overhead in memory and storage footprint. As it turns out, this issue adds complexity to all stages of a software development lifecycle – from development and test to production and disaster recovery. This approach also severely limits the portability of applications between public clouds, private clouds, and traditional data centers.

Up until now we have been creating VMs via https://xoa.comp.dkit.ie

However, quite often we have only needed to run a single service that uses far fewer resources and only needs to exist for a short period of time. This is where containers come in.

What Are Containers?

Operating system (OS) virtualisation has grown in popularity over the last decade as a way to make software run predictably and well when moved from one server environment to another. Containers provide a way to run these isolated systems on a single server or host OS.

Containers sit on top of a physical server and its host OS – for example, Linux or Windows. Each container shares the host OS kernel and, usually, the binaries and libraries, too. Shared components are read-only. Containers are thus exceptionally “light”—they are only megabytes in size and take just seconds to start, versus gigabytes and minutes for a VM.

Containers also reduce management overhead. Because they share a common operating system, only a single operating system needs care and feeding for bug fixes, patches, and so on. This concept is similar to what we experience with hypervisor hosts: fewer management points but a slightly larger fault domain. In short, containers are lighter weight and more portable than VMs.
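The "megabytes versus gigabytes" point can be made concrete with some rough arithmetic. The sketch below is only an illustration: every size in it is an assumed round number, not a measurement from our servers, and the model follows the simplification used above (one full guest OS per VM, one shared host OS for all containers). The hypervisor's own footprint is ignored.

```python
# Illustrative figures only. These sizes are assumptions, not measurements.
GUEST_OS_MB = 2048  # assumed size of the guest OS image inside each VM
HOST_OS_MB = 2048   # assumed size of the single host OS that containers share
APP_MB = 150        # assumed size of one service plus its own libraries


def vm_footprint_mb(services: int) -> int:
    """Every VM carries a full guest OS as well as the service itself."""
    return services * (GUEST_OS_MB + APP_MB)


def container_footprint_mb(services: int) -> int:
    """The host OS is counted once; each container adds only the service."""
    return HOST_OS_MB + services * APP_MB


def operating_systems_to_patch(services: int, use_containers: bool) -> int:
    """Containers share the host OS, so (in this simplified model) only it needs patching."""
    return 1 if use_containers else services


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(
            f"{n:>2} services: "
            f"VMs {vm_footprint_mb(n)} MB, "
            f"containers {container_footprint_mb(n)} MB"
        )
```

With a single service the two totals come out the same under these assumptions; the saving appears as the number of services on one host grows.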

To summarise:

The last one, of course, could be solved by running your own Docker instance inside a VM! See the notes at the end of this article.

Say Hello To Docker

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers, as you know by now, allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it all out as one package. By doing so, thanks to the container, the developer can rest assured that the application will run on any other Linux machine regardless of any customized settings that machine might have that could differ from the machine used for writing and testing the code.

In a way, Docker is a bit like a virtual machine. But unlike a virtual machine, rather than creating a whole virtual operating system, Docker allows applications to use the same Linux kernel as the system that they’re running on and only requires applications be shipped with things not already running on the host computer. This gives a significant performance boost and reduces the size of the application.
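One way to see the shared kernel for yourself is to run the same small script on a Linux Docker host and then inside a container on that host: both should report the same kernel release, whereas a VM reports the kernel of its own guest OS. The sketch below uses only the Python standard library. The `/.dockerenv` check is a common heuristic rather than an official interface, and the function names are made up for this example.

```python
import os
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


def looks_like_docker_container() -> bool:
    """Heuristic only: Docker normally creates /.dockerenv inside a container."""
    return os.path.exists("/.dockerenv")


if __name__ == "__main__":
    where = "inside a container" if looks_like_docker_container() else "on a host or VM"
    print(f"Running {where}; kernel release: {kernel_release()}")
```

If Docker itself is running inside a VM, the containers will report that VM's kernel, since that VM is their host.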

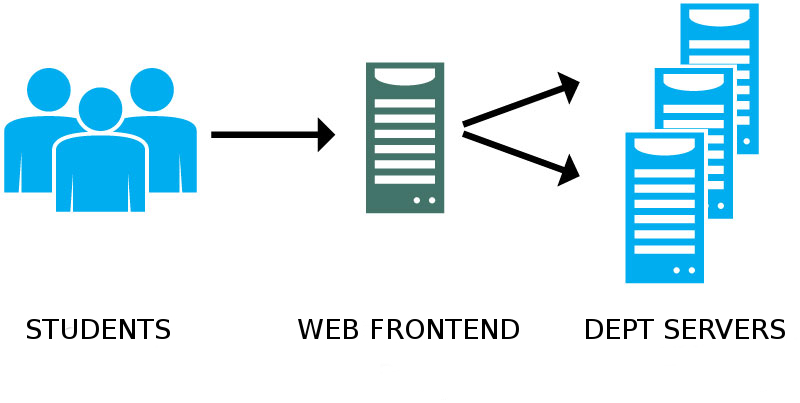

While very powerful, Docker is also incredibly complex and requires both access to the server command line AND an intimate understanding of Linux – two things students generally do not have. For this reason, we will be using a web frontend with LDAP authentication to allow students full access to our Docker infrastructure, much in the same way you can access our backend XenServer infrastructure via the frontend Xen Orchestra Dashboard.

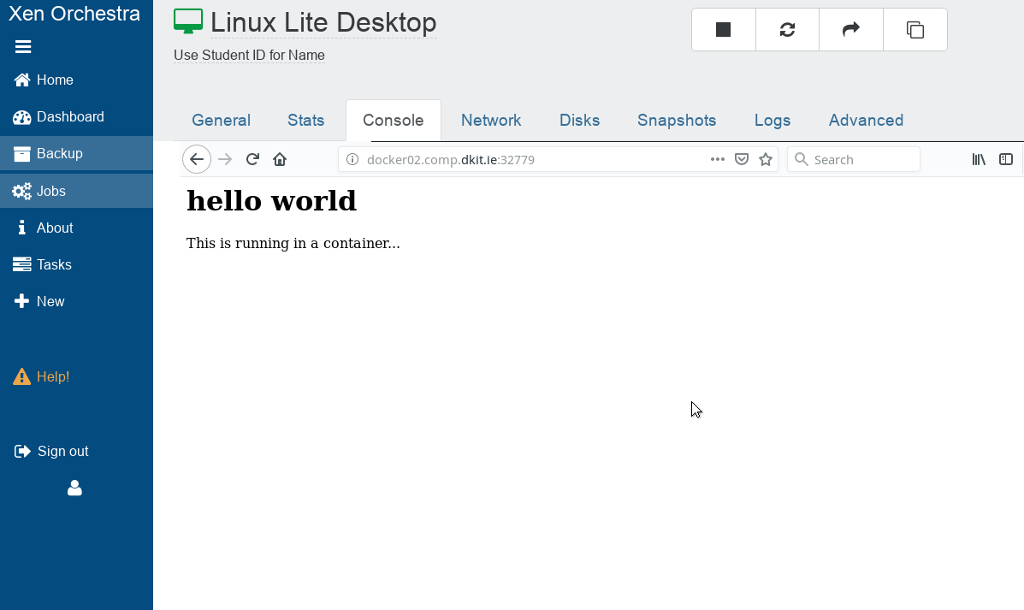

The frontend for Docker is found at https://.docker.comp.dkit.ie and, as with XOA, you can log in but will then need to request that permissions be added to your account. Also like XOA, the web frontend is used mostly for managing your containers. You still need to connect to your containers over SSH or the web to work with them (don’t forget to also install the actual SSH server component!), which can be done just fine inside DkIT (outside the college we recommend firing up a Linux Lite VM via http://xoa.comp.dkit.ie and using that as your client machine).

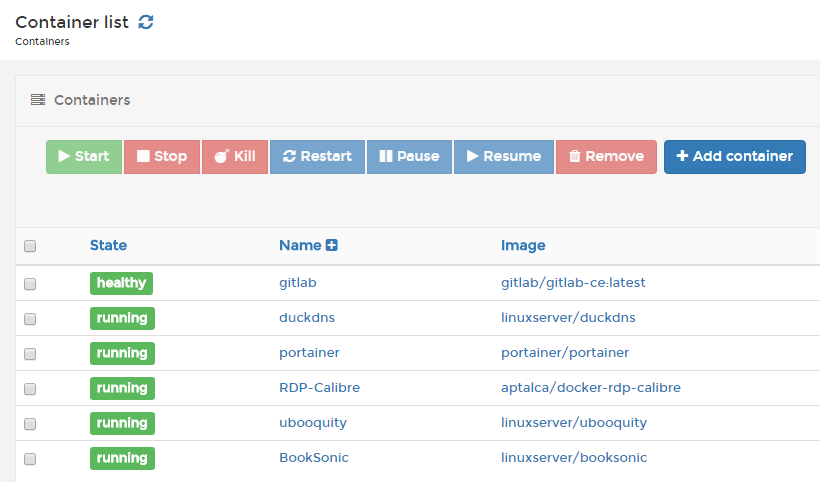

There is also a web console available for each container via the portainer.io web frontend we are using (and running as a container itself! #mindblown)

We have provided a local registry to store user images, and have also created container templates (like the VM templates we created in XOA) to make things easier (and quicker). There’s a lot to explain to you, so we will add multiple tutorials over the coming days to cover the following topics:

- Creating a Private Network for Your Docker Containers

- Building an Image from a Dockerfile (and adding to the DkIT Registry)

- Creating a Docker Container from a Pulled Image

- Using Pre-Built Docker Container Templates

- Accessing Your Docker Containers on your Private Network

- Accessing Your Docker Containers Remotely

It’s going to be a whole lot of fun, and ultimately incredibly beneficial to you as a future developer.

Note: If you’d like to learn about Docker at the command line, there is a “Docker Host (with Dockly)” template inside XOA. You can play around with it and learn a few extra things (we’ve added the amazing dockly tool for you), but you’ll need some reference material. There’s way too much for us to cover in this tutorial series, and going forward we will (mostly) be supporting and promoting only the web frontend (powered by portainer.io).

The book we used, and recommend, is Using Docker by Adrian Mouat (O’Reilly Media).

It’s really, really good and can be bought online second-hand. You should also have a look at this article.